In the digital landscape, ensuring your website is visible on search engines like Google is crucial for attracting visitors. However, certain factors can prevent search engines from indexing your content properly. Often the cause is a misconfiguration in a file such as robots.txt, which tells search engines which parts of your site they may crawl. If search engines cannot reach your content because of these restrictions, your site’s visibility suffers, which makes it essential to understand and address these blocking risks in indexing.

Several obstacles can affect how search engines perceive your website, ranging from minor technical errors to major service interruptions. Issues like incorrect directives in your robots.txt file, broken links, or even temporary server downtime can create barriers that block search engines from indexing your pages effectively. Recognizing these potential pitfalls is the first step toward ensuring that your content reaches its intended audience. By diagnosing these problems, you can take proactive measures to rectify them and enhance your site’s overall performance.

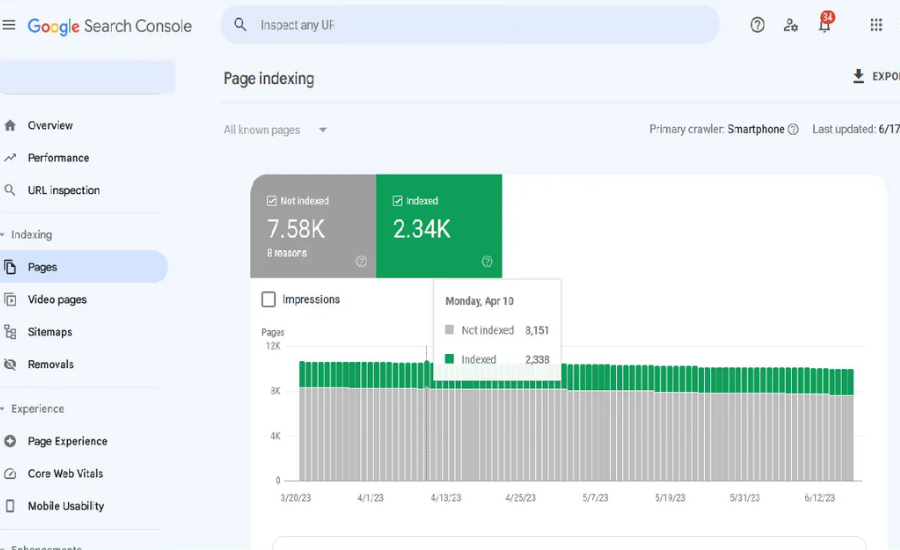

To mitigate these issues and improve your site’s discoverability, take a strategic approach. Begin by thoroughly reviewing your website’s configuration and server status to make sure there are no inadvertent restrictions on crawling. Tools like Google Search Console can then show you how your site is indexed and alert you to any issues. With these strategies, you can keep blocking risks in indexing under control, leading to improved visibility and a better experience for your audience.

Comprehending Blocking Risks in Indexing: Ensuring Search Engine Visibility

When building a website, your primary goal is to make it accessible to as many users as possible. To achieve this, search engines such as Google must successfully crawl and index your site so that it appears in relevant search results. Indexing allows search engines to analyse and categorise your content, making it available to users searching for similar information. However, certain challenges can interfere with this process, creating what are known as “blocking risks in indexing.” These obstacles can prevent your website from being fully visible in search engine results.

Various factors can contribute to blocking risks in indexing, usually stemming from technical issues or misconfigurations on your site. A common cause is an incorrect rule in the robots.txt file, which can unintentionally stop search engines from crawling specific parts of your site. Broken internal links, incorrect metadata, or improper use of tags such as ‘noindex’ and canonical links can also contribute. When search engines encounter these barriers, they cannot index your content properly, which in turn affects your website’s discoverability and overall ranking.

To avoid these complications and ensure that your website is indexed correctly, audit your site regularly for potential issues. By reviewing your robots.txt file, monitoring your site’s performance with tools like Google Search Console, and fixing technical errors promptly, you can minimise these blocking risks. These proactive steps help ensure that your website is fully accessible to search engines and can be easily found by your target audience.

Understanding the Concept of Blocking Risks in Indexing

Think of your website as a store where certain rooms are visible to visitors, while others remain hidden behind closed doors. Search engines, much like visitors, can only explore and display what is accessible to them. When key areas of your website are restricted or blocked, these “closed doors” prevent search engines from discovering your content. This directly affects how much of your site appears in search results, reducing the chances of users finding you online. These obstacles are referred to as “blocking risks in indexing,” and they can seriously harm your website’s visibility in search results.

When parts of your site are blocked from search engines, whether by technical settings or incorrect configurations, the indexing process is severely limited. Valuable content on your website remains unseen, much like visitors missing out on essential rooms in the store. Issues such as poorly set permissions, blocked URLs, or misconfigured files such as robots.txt can create these barriers. Understanding and identifying these issues is the first step in preventing blocking risks in indexing.

To maximise your website’s reach, keep these barriers in check and make sure all relevant sections are available to search engines. Regularly reviewing your website’s settings, fixing errors, and optimising accessibility all help you avoid blocking risks in indexing. By keeping all the “doors” open, you let search engines fully index your site, improving your chances of being seen and increasing your site’s traffic.

Common Causes of Blocking Risks Indexing

There are several reasons why parts of your website might be blocked from being indexed by search engines. One of the most common is a misconfigured robots.txt file. This file acts as a guide, telling search engines which areas of your website are open for crawling and which are off-limits. If it is misconfigured, it can inadvertently block important sections of your site, leaving gaps in indexing and preventing key pages from appearing in search results.

The Role of Meta Tags and Headers in Blocking Indexing

Meta tags and HTTP headers give search engines specific instructions on how to handle your web pages. For instance, a ‘noindex’ directive, set either in a robots meta tag or in an X-Robots-Tag HTTP header, tells search engines to exclude a page from their results. This is useful when applied intentionally, but a mistake in setting up these directives can hide parts of your site unintentionally. If not carefully managed, such errors keep crucial content out of the index even though search engines can still reach it. Configuring your meta tags correctly is therefore essential for avoiding blocking risks in indexing.
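As a rough illustration of what a ‘noindex’ check involves, the Python sketch below reads the robots meta tags from a page’s HTML using only the standard library. It assumes the page has already been downloaded, and it only looks at `<meta name="robots">` and `<meta name="googlebot">`; the function names are hypothetical and not part of any SEO tool.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot"> tags."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser already lowercases tag and attribute names.
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def robots_directives(html: str) -> set:
    """Return every robots directive declared in the page's meta tags."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


def is_noindexed(html: str) -> bool:
    """True if the meta tags ask search engines not to index the page."""
    directives = robots_directives(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in directives or "none" in directives
```

A result containing `noindex` (or `none`) on a page you want in search results is exactly the kind of accidental block described above.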

Linking Errors and Canonical Tags

Another potential issue is the incorrect use of canonical tags, which guide search engines on how to handle duplicate or similar content by indicating which version of a page is the primary one to index. If these tags are applied incorrectly, for example by pointing many distinct pages at a single URL, search engines may skip pages you wanted indexed. Linking errors have a similar effect: pages that are only reachable through broken or missing internal links may never be discovered. Fixing linking errors and setting up canonical tags properly is critical to avoiding these blocking risks in indexing.
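To see which canonical URL a page actually declares, you can parse its `<link rel="canonical">` elements. The Python sketch below does this with the standard library and resolves relative hrefs against the page URL; the HTML snippets and function names are hypothetical. More than one result suggests conflicting signals, and an empty list means no canonical is declared.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class CanonicalParser(HTMLParser):
    """Collects the href of every <link rel="canonical"> on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        # rel may hold several space-separated tokens.
        if "canonical" in attr.get("rel", "").lower().split() and attr.get("href"):
            self.hrefs.append(attr["href"])


def canonical_urls(page_url: str, html: str) -> list:
    """Return the absolute canonical URL(s) declared in `html`."""
    parser = CanonicalParser()
    parser.feed(html)
    parser.close()
    # Relative hrefs are resolved against the page's own URL.
    return [urljoin(page_url, href) for href in parser.hrefs]
```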

Server Downtime and Indexing Challenges

Server reliability plays a significant role in ensuring that search engines can regularly access and index your website. If your server frequently experiences downtime or returns errors, search engines may struggle to visit your site: repeated server errors (5xx responses) typically cause crawlers to slow down, and pages that keep failing may eventually be dropped from the index. Prolonged server issues therefore leave gaps in your site’s indexing and add to blocking risks in indexing. Maintaining a stable server environment is crucial to preventing them.

Complex Website Designs and Indexing Difficulties

Websites that rely on JavaScript, or on AJAX requests that load content after the page opens, may offer enhanced user experiences but often present challenges for search engines. Content that only appears after scripts run must be rendered before it can be indexed. Google can render JavaScript, but this is an extra step that can be delayed, and not every crawler does it. If search engines cannot fully render or access your site, critical information may go unnoticed, causing indexing problems. Simplifying your website’s code or providing alternative ways for search engines to access the content can mitigate these blocking risks in indexing.

Review Your Robots.txt to Avoid Blocking Risks in Indexing

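The robots.txt file is an essential tool for guiding search engines through your website, telling them which parts they may crawl and which to ignore. However, an incorrectly configured file can unintentionally block critical sections of your site and keep search engines from crawling, and therefore properly indexing, those areas. Note that robots.txt controls crawling rather than indexing: a blocked URL can still appear in results without its content if other pages link to it, so pages you want kept out of the index need a ‘noindex’ directive instead, and they must stay crawlable for search engines to see it. Regularly reviewing your robots.txt file ensures that important pages remain accessible, minimising blocking risks in indexing and improving your site’s discoverability.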
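Before publishing a robots.txt change, you can test it against a list of URLs that must stay crawlable. The sketch below uses Python’s standard `urllib.robotparser` module; the rules, URLs and `blocked_urls` helper are hypothetical examples. Python’s parser follows the original robots.txt convention (the first matching rule wins, and `*` or `$` inside paths are not supported), whereas Google applies the most specific matching rule, so treat the result as an approximation.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: the /blog rule was meant for a drafts folder only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
# Intended to hide only /blog-drafts/, but this prefix also matches /blog/...
Disallow: /blog
"""

IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/blog/indexing-guide",
    "https://example.com/blog-archive/2023",
    "https://example.com/admin/login",
]


def blocked_urls(robots_txt: str, urls: list, user_agent: str = "Googlebot") -> list:
    """Return the URLs that `user_agent` would not be allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("blocked:", url)
```

Because `Disallow` rules match URL prefixes, `Disallow: /blog` blocks `/blog/indexing-guide` and even `/blog-archive/2023`, which is the kind of unintended block described above.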

Use Meta Tags Correctly to Prevent Indexing Errors

Meta tags such as ‘noindex’ are crucial for controlling which pages appear in search engine results. They are useful for deliberately excluding certain content, but improper use can accidentally exclude important pages. Apply ‘noindex’ only to content that you explicitly don’t want to appear in search results, so that valuable parts of your site are not hidden. Correct use of meta tags is key to avoiding blocking risks in indexing.
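One way to apply this rule consistently is to keep an explicit list of pages that are meant to be excluded and compare it with the directives actually found on each page. The Python sketch below assumes you have already collected each page’s robots directives (for example with a site audit tool); the paths and the `audit_noindex` helper are hypothetical.

```python
from typing import Dict, List, Set


def audit_noindex(directives_by_url: Dict[str, Set[str]],
                  meant_to_exclude: Set[str]) -> Dict[str, List[str]]:
    """Compare the noindex directives found on pages with the pages you intend to hide."""
    report = {"accidentally_hidden": [], "missing_noindex": []}
    for url, directives in sorted(directives_by_url.items()):
        # "none" is shorthand for "noindex, nofollow".
        noindexed = bool({"noindex", "none"} & {d.lower() for d in directives})
        if noindexed and url not in meant_to_exclude:
            report["accidentally_hidden"].append(url)  # wanted in search, but hidden
        elif not noindexed and url in meant_to_exclude:
            report["missing_noindex"].append(url)  # meant to be hidden, but indexable
    return report
```

For example, a pricing page carrying a leftover `noindex` from a staging build would appear under `accidentally_hidden`.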

Verify Canonical Tags to Direct Search Engines Properly

Canonical tags help search engines identify the primary version of similar or duplicate content on your website. If these tags are misapplied, search engines may overlook key pages, resulting in indexing issues. Make sure each canonical tag points to the correct page so that search engines are guided through your site properly and blocking risks in indexing do not affect your search visibility.
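A simple way to verify canonical tags is to record the URL you intend each page to point to and compare it with what the page actually declares. This Python sketch assumes both mappings have already been collected; the URLs and the `canonical_mismatches` helper are hypothetical.

```python
from typing import Dict, List, Optional, Tuple


def canonical_mismatches(
    declared: Dict[str, Optional[str]],
    preferred: Dict[str, str],
) -> List[Tuple[str, Optional[str], str]]:
    """List (page, declared canonical, intended canonical) where the two differ.

    declared:  page URL -> canonical URL found on the page (None if missing)
    preferred: page URL -> URL you want treated as the primary version
    """
    problems = []
    for page, wanted in sorted(preferred.items()):
        found = declared.get(page)  # also None if the page was never checked
        if found != wanted:
            problems.append((page, found, wanted))
    return problems
```

In a typical case, a colour variant that points at the homepage and a page with no canonical at all would both be flagged.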

Maintain a Healthy Server to Support Indexing

Your website’s server plays a significant role in the indexing process. If your server experiences frequent downtime or slow response times, search engines may struggle to access your site, hindering their ability to index your content. Regularly monitoring and maintaining your server’s health keeps your website available and reduces blocking risks in indexing, so that search engines can easily crawl and index your site.
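A basic availability check can be scripted in a few lines. The Python sketch below fetches a URL once, records the HTTP status and how long the request took, and classifies the result. It uses only the standard library; the `check_url` and `classify` names are hypothetical, and a real monitoring setup would run such checks on a schedule and alert on repeated failures. The classification reflects that crawlers such as Googlebot typically slow down when they receive 5xx or 429 responses.

```python
import time
import urllib.error
import urllib.request
from typing import Optional, Tuple


def check_url(url: str, timeout: float = 10.0) -> Tuple[Optional[int], float]:
    """Fetch `url` once and return (HTTP status, seconds taken).

    The status is None when no HTTP response arrived at all (DNS failure,
    refused connection, timeout). urlopen follows redirects, so a 3xx is
    normally reported as the status of the final page.
    """
    start = time.monotonic()
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            status = response.status
    except urllib.error.HTTPError as err:
        status = err.code  # 4xx and 5xx responses are raised as HTTPError
        err.close()
    except OSError:
        status = None  # URLError, refused connections and timeouts are all OSErrors
    return status, time.monotonic() - start


def classify(status: Optional[int]) -> str:
    """Map a status code to a rough crawl-health category."""
    if status is None:
        return "unreachable"
    if status == 429 or status >= 500:
        return "server problem"  # crawlers typically back off on these
    if status >= 400:
        return "client error"
    if status >= 300:
        return "redirect"
    return "ok"
```

Running `classify(check_url(url)[0])` over your key URLs and alerting whenever the result is not `"ok"` gives a simple early warning.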

Simplify Your Site’s Code for Better Indexing

Complex coding elements like JavaScript or AJAX can enhance the user experience but may pose challenges for search engines trying to index your content. If these elements are not set up in a search-engine-friendly manner, important sections of your website might be missed. Simplifying your site’s code or providing alternative ways for search engines to access the content can significantly reduce blocking risks in indexing, so that all crucial information is properly indexed and displayed in search results.
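A quick, conservative test is to check whether key text is present in the HTML the server sends, before any JavaScript runs. The Python sketch below extracts the text of a raw HTML document (ignoring `<script>` and `<style>` contents) and looks for a phrase. The page snippets and function names are hypothetical. A phrase that only appears after scripts run is not necessarily invisible to Google, which can render JavaScript, but it depends on that extra rendering step.

```python
from html.parser import HTMLParser


class VisibleTextParser(HTMLParser):
    """Collects text nodes from raw HTML, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def phrase_in_initial_html(html: str, phrase: str) -> bool:
    """True if `phrase` appears in the page text before any JavaScript runs."""
    parser = VisibleTextParser()
    parser.feed(html)
    parser.close()
    # Collapse whitespace and ignore case so formatting differences don't matter.
    text = " ".join(" ".join(parser.chunks).split()).lower()
    return " ".join(phrase.split()).lower() in text
```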

Monitoring Website Indexing to Prevent Blocking Risks

Regularly monitoring your website with tools like Google Search Console is essential for catching problems early. Search Console shows which parts of your site have been indexed and highlights issues that may be preventing search engines from fully accessing your content. By keeping an eye on these reports, you can address problems quickly and prevent blocking risks in indexing, so that your website remains easy to discover for search engines and users alike.

Tracking your website’s indexing status helps you identify potential barriers to your visibility in search results. Whether they are technical issues or misconfigurations, addressing these obstacles promptly lets search engines crawl and index your site efficiently. Proactive monitoring keeps your content accessible, helping you avoid blocking risks in indexing and ensuring that your audience can find your site with ease.

Utilising Google Search Console for Indexing Insights

Google Search Console is a free tool that shows you clearly how Google perceives your website. It reports which pages are indexed, which are not, and the specific reason each excluded page was left out. Using it, you can monitor your site’s performance and identify any blocking risks in indexing that may prevent your content from being discovered.

Employing Site Audit Tools for Comprehensive Analysis

In addition to Google Search Console, site audit tools such as Screaming Frog, Ahrefs, and SEMrush can help you understand how search engines interact with your site. These tools simulate search engine crawling, helping you detect potential indexing problems such as broken links or server errors. Running them regularly lets you uncover issues that could be affecting your website’s visibility and address them proactively, minimising blocking risks in indexing.
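At their core, these tools repeat one step: fetch a page, extract its links, and queue the ones on the same site for checking. The Python sketch below shows only the link-extraction part, using the standard library; the URLs are placeholders and the helper names are hypothetical. A real crawler would also fetch each link, record its status code to find broken links, and respect robots.txt.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkParser(HTMLParser):
    """Collects the href of every <a> element."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url: str, html: str) -> list:
    """Return same-host links on a page as absolute URLs without #fragments."""
    parser = LinkParser()
    parser.feed(html)
    parser.close()
    host = urlparse(page_url).netloc
    links = set()
    for href in parser.hrefs:
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        parsed = urlparse(absolute)
        # Skip mailto:, javascript: and links to other hosts.
        if parsed.scheme in ("http", "https") and parsed.netloc == host:
            links.add(absolute)
    return sorted(links)
```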

Conducting Manual Checks for Optimal Performance

Performing periodic manual checks of your website’s critical elements, including the robots.txt file, meta tags, and canonical links, is essential for maintaining a healthy indexing status. Reviewing these components confirms that they are correctly configured and free from errors that might cause unexpected indexing problems. This hands-on approach helps you catch issues before they escalate and safeguards your site against blocking risks in indexing, keeping your content accessible to search engines.

Strategies for Improving Website Indexing

To ensure that your website is effectively indexed by search engines, regularly review and optimise your robots.txt file. This file tells search engine crawlers which sections of your site they may crawl. Keeping it up to date prevents accidental blocking of important pages while still letting search engines navigate your site efficiently. A well-configured robots.txt file can significantly reduce blocking risks in indexing and improve your site’s visibility in search results.
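When you update robots.txt, a useful safeguard is to compare the old and new versions against the same list of URLs and list any page the change newly blocks. This Python sketch uses the standard `urllib.robotparser` module; the rules, URLs and `newly_blocked` helper are hypothetical, and Python’s matching (first matching rule wins, no `*` or `$` path wildcards) only approximates how Google evaluates the file.

```python
from urllib.robotparser import RobotFileParser


def _parser(robots_txt: str) -> RobotFileParser:
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def newly_blocked(old_robots: str, new_robots: str, urls: list,
                  user_agent: str = "Googlebot") -> list:
    """URLs that the old robots.txt allowed but the new one disallows."""
    old, new = _parser(old_robots), _parser(new_robots)
    return [url for url in urls
            if old.can_fetch(user_agent, url) and not new.can_fetch(user_agent, url)]


OLD = "User-agent: *\nDisallow: /blog-drafts/\n"
NEW = "User-agent: *\nDisallow: /blog\n"  # hypothetical edit that is too broad

if __name__ == "__main__":
    urls = ["https://example.com/blog/guide", "https://example.com/about"]
    print(newly_blocked(OLD, NEW, urls))
```

Running a check like this before deploying turns an accidental block into a failed review rather than a drop in indexed pages.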

Utilising Meta Tags and HTTP Headers Effectively

Proper use of meta tags and HTTP headers is essential for guiding how search engines handle your content. Apply directives such as ‘noindex’ judiciously, only on pages that you genuinely wish to exclude from search results. The same directive can also be sent in an X-Robots-Tag HTTP header, which is useful for non-HTML files such as PDFs and is easy to overlook because it does not appear in the page source. Misconfiguration can hide valuable content from search engines and hurt your site’s performance. By carefully managing your meta tags and headers, you can minimise blocking risks in indexing and optimise the indexing process.
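Because an X-Robots-Tag header never shows up in the HTML, it is worth checking response headers separately. The Python sketch below decides whether a set of X-Robots-Tag values contains a ‘noindex’ for a given crawler. It is a simplified, hypothetical helper: it assumes you have already collected the header values (for example with `response.headers.get_all("X-Robots-Tag")` on a `urllib` response), and it does not implement every rule in Google’s robots directive documentation.

```python
from typing import Iterable

# Directives that carry their own "name: value" argument, so the text before
# the colon is not a crawler name. (Assumed list; extend as needed.)
DIRECTIVES_WITH_VALUES = {
    "max-snippet", "max-image-preview", "max-video-preview", "unavailable_after",
}


def header_noindex(header_values: Iterable[str], bot: str = "googlebot") -> bool:
    """Return True if any X-Robots-Tag value tells `bot` not to index the page.

    Simplified sketch: understands values such as "noindex", "noindex, nofollow"
    and "googlebot: noindex", and treats "none" as noindex plus nofollow.
    """
    for raw in header_values:
        value = raw.strip().lower()
        target = None  # None means the value applies to every crawler
        head, sep, tail = value.partition(":")
        if sep and "," not in head and head.strip() not in DIRECTIVES_WITH_VALUES:
            target, value = head.strip(), tail  # value is prefixed with a crawler name
        if target is not None and target != bot.lower():
            continue  # addressed to a different crawler
        directives = {part.strip() for part in value.split(",")}
        if "noindex" in directives or "none" in directives:
            return True
    return False
```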

Ensuring Correct Implementation of Canonical Tags

Canonical tags help search engines determine the primary version of content, particularly when duplicate content exists. Verify that every canonical tag points to the correct URL. Mismatches or incorrect implementations can confuse search engines and cause pages to be missed. By routinely checking and correcting these tags, you can improve your site’s indexing efficiency and reduce blocking risks in indexing, ensuring that your preferred content is prioritised.
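One implementation mistake that is easy to miss page by page is a canonical chain (A points to B, which points to C) or a loop (A points to B, which points back to A). It is generally recommended to point a canonical directly at the final preferred URL. The Python sketch below follows declared canonicals through a mapping you have already collected; the paths and helper names are hypothetical.

```python
from typing import Dict, List, Tuple


def resolve_canonical(start: str, canonical_of: Dict[str, str]) -> Tuple[List[str], bool]:
    """Follow canonical links from `start`; return (path followed, loop detected)."""
    path = [start]
    while True:
        target = canonical_of.get(path[-1])
        if target is None or target == path[-1]:
            return path, False  # ends at a self-canonical or undeclared page
        if target in path:
            return path + [target], True  # we have been here before: a loop
        path.append(target)


def canonical_issues(canonical_of: Dict[str, str]) -> Dict[str, str]:
    """Report pages whose canonical forms a loop or needs more than one hop."""
    issues = {}
    for page in sorted(canonical_of):
        path, loop = resolve_canonical(page, canonical_of)
        if loop:
            issues[page] = "loop: " + " -> ".join(path)
        elif len(path) > 2:
            issues[page] = "chain: " + " -> ".join(path)
    return issues
```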

Monitoring and Improving Server Performance

A well-performing server is crucial for keeping a website accessible to search engines. Regular monitoring of your server’s performance helps you identify issues such as downtime or slow response times, which can impede crawling and indexing. By optimising server performance, you give search engines uninterrupted access to your site and improve the overall indexing process. This proactive approach improves user experience and also helps mitigate blocking risks in indexing, keeping your content discoverable.
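Server access logs show how your server actually responded to crawlers, which complements uptime checks. The Python sketch below counts response classes (2xx, 5xx and so on) served to requests whose user agent mentions Googlebot. It assumes the common Apache/Nginx “combined” log format; adjust the regular expression for other formats. User-agent strings can be faked, so Google recommends verifying genuine Googlebot traffic with a reverse DNS lookup before drawing firm conclusions.

```python
import re
from collections import Counter
from typing import Iterable

# Combined Log Format (assumed): ip ident user [time] "request" status size "referer" "agent"
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_status_summary(lines: Iterable[str]) -> Counter:
    """Count 2xx/3xx/4xx/5xx responses sent to requests claiming to be Googlebot."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match and "googlebot" in match["agent"].lower():
            counts[match["status"][0] + "xx"] += 1
    return counts  # lines that don't match the format are skipped
```

A growing `5xx` count in this summary is a sign that crawlers are running into server errors.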

Optimising Content for Search Engine Crawling

If your website relies heavily on JavaScript or AJAX, adapt how your content is delivered so that it is easier to crawl. Consider server-side rendering or similar techniques that put the content directly into the HTML sent to search engines. When search engines struggle to read content because of complex code, the result can be incomplete indexing and reduced visibility in search results. By prioritising effective content delivery methods, you help search engines index your material easily, minimising blocking risks in indexing and improving your site’s performance in search results.
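The idea behind server-side rendering is that the server sends finished HTML rather than an empty container that JavaScript fills in later. The Python sketch below is a deliberately minimal stand-in for what rendering frameworks do at much larger scale: it builds the article markup on the server, escaping the text, so the content is present in the first response. The `article` structure and `render_article` function are hypothetical.

```python
from html import escape


def render_article(article: dict) -> str:
    """Build complete HTML for an article on the server.

    `article` is a hypothetical dict with "title" (str) and "paragraphs" (list of str).
    Escaping prevents characters like < and & from breaking the markup.
    """
    paragraphs = "\n".join(f"  <p>{escape(p)}</p>" for p in article["paragraphs"])
    title = escape(article["title"])
    return (
        "<!doctype html>\n"
        f"<html><head><title>{title}</title></head>\n"
        "<body>\n"
        f"  <h1>{title}</h1>\n"
        f"{paragraphs}\n"
        "</body></html>"
    )
```

A crawler that does not execute JavaScript still receives the headline and every paragraph, because they are part of the initial response.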

Conclusion

In conclusion, addressing blocking risks in indexing is vital for ensuring your website’s visibility in search engine results. By optimising your robots.txt file, correctly implementing meta tags and canonical tags, and maintaining a reliable server, you can significantly improve how completely your site is indexed. Regular monitoring with tools like Google Search Console and thorough audits will help identify obstacles that may prevent search engines from crawling your content effectively. Ultimately, these strategies not only improve indexing but also enhance user experience, allowing your target audience to easily discover your valuable content.

FAQs

Q1. What are blocking risks in indexing?

A. Blocking risks in indexing are issues that prevent search engines from properly crawling and indexing a website, affecting its visibility in search results.

Q2. What causes blocking risks in indexing?

A. Common causes include misconfigurations in the robots.txt file, incorrect meta tags, broken links, server downtime, and complex coding that search engines struggle to interpret.

Q3. How can I check if my site has indexing issues?

A. You can use tools like Google Search Console to identify which pages are indexed and uncover any issues that may be preventing proper indexing.

Q4. Why is the robots.txt file important?

A. The robots.txt file tells search engines which parts of your website they may crawl. Misconfigurations can lead to accidental blocking of important pages.

Q5. How can I improve my site’s indexing?

A. Regularly audit your website for technical issues, optimise your robots.txt file and meta tags, maintain a reliable server, and ensure your site’s content is easily crawlable.

Stay In Touch For More Updates And Alerts: Discover Tribune!